A modern data infrastructure with automated pipelines typically requires many different tools for integration, modeling, orchestration, monitoring, and visualization. Managing these tools takes time and expertise, which can be costly, and the tools alone don’t guarantee accessible, reliable, production-ready data pipelines. As a result, outdated and disconnected data systems are still common in many organizations, making it hard to access and process information.

Overall, two core challenges for modern data infrastructures are accessibility and scalability. A data pipeline is considered accessible and scalable when it can handle large volumes of data and requests in an efficient manner and adapt to changing needs while providing easy access to data on demand.

In the worst-case scenario, inaccessible and unscalable data pipelines result in slow and inefficient decision-making, missed business opportunities, and decreased competitiveness. Employees might spend hours manually collecting and cleaning data or lack critical information when having to make decisions. The consequences of having an inefficient data infrastructure can reverberate throughout the organization, hindering its ability to stay ahead in a rapidly changing marketplace.

Common accessibility issues in data pipelines

Accessibility is the degree to which authorized users can find and use data. It depends both on where the data lives (a dashboard, warehouse, or data model) and on how usable the data stack that produced it is. A lack of accessibility in data pipelines hinders an organization’s ability to leverage its data effectively. The following are the most prominent problems:

Siloed data

When data is stored and processed in disparate systems or tools, it can be challenging to bring it all together for analysis and decision-making.

Lack of standardization

Without standardized naming conventions, formats, metric definitions, and storage, it can be difficult for different teams to access and understand the data.

Security and privacy concerns

Data pipelines may contain confidential data, making security and privacy a significant concern that necessitates protection against unauthorized access. However, accessibility issues can arise when authorized users encounter challenges in using the data or when inadequate security measures result in unauthorized individuals accessing protected information.

Technical complexity

Data pipelines can be complex to set up and maintain, requiring specialized technical expertise as well as data literacy to leverage them. Data teams may sometimes struggle to understand the pipeline’s intricacies.

Data latency

When data is not processed often enough, it is already outdated by the time teams use it, which undermines timely and accurate analysis.

Lack of transparency

When data pipelines are poorly documented and monitored, it can be difficult to understand how the data is being processed and where it comes from.

These accessibility issues make it challenging for organizations to leverage their data effectively. When data is not easily accessible or properly documented, data teams struggle to locate and use it, which slows analysis and reduces the accuracy of insights. As a result, data teams may be unable to inform key decisions, leading to missed business opportunities and suboptimal decision-making. Addressing these challenges is therefore essential to ensure that data pipelines are optimized for performance, reliability, and accessibility.

Common scalability issues in data pipelines

Scalability can be defined as a data management system’s ability to handle increasing volumes of data and processing requirements without sacrificing performance, reliability, or cost-effectiveness. As data requirements and volumes grow, scalability becomes a critical concern. Without a scalable data pipeline infrastructure, organizations risk facing various issues that can limit their ability to process data efficiently and make informed decisions. Here is a list of the specific problems that can arise from a lack of scalability:

Inflexibility

As data requirements change over time, the data pipeline infrastructure must be flexible enough to accommodate new data sources, data types, and processing needs. For example, imagine that a team wants to add a new data source to their pipeline: comments from social media. Issues may arise if their infrastructure is not flexible enough to handle unstructured data.

Lack of automation

Manual processes in a data pipeline can become time-consuming and error-prone when the volume of data or requests grows.

Inefficient data management

Data duplication, fragmentation, inconsistency, and loss can all result from suboptimal data handling throughout the data’s lifecycle, from collection to disposal.

Integration challenges

Integrating new data sources or processing tools into a non-scalable data pipeline can take time and effort, leading to increased architecture complexity and processing time. The difficulties typically stem from incompatible data formats, data quality issues, and technological differences between the new and existing data sources or processing tools.

As a business grows, scalability issues can significantly impact data teams. They can cause data processing to slow down and delay insights and decisions, which can negatively impact the performance of the organization as a whole. Furthermore, data teams may face challenges in integrating new data sources or data types, potentially limiting their ability to generate comprehensive insights and leading to a loss of confidence in the organization’s data and analytics capabilities.

However, careful preparation and execution can solve these accessibility and scalability issues. The steps in the following sections of this article will help you design an accessible and scalable production-ready data pipeline.

How to build an accessible data pipeline

Although the efforts required to build an accessible data pipeline may seem daunting at first, it is crucial to recognize the significant long-term benefits that can be derived from them. A well-designed and accessible data pipeline enables data teams to efficiently and reliably collect, process, and analyze data, accelerating their medium- to long-term workflow and facilitating informed decision-making. Here are the steps to build an accessible data pipeline:

Define your data requirements

Identify your organization’s data sources, types, and processing needs to help determine how data will flow through the pipeline. This will ensure that data is stored and routed logically and consistently.

Implement standardization

Establish standard naming conventions, formatting, and storage for your data. This not only makes it easier for teams to find and access data but also reduces the risk of errors or misinterpretations due to inconsistencies. Standardization can also simplify the process of integrating new data sources into the pipeline.
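As an illustration of a naming convention in practice, here is a minimal Python sketch that normalizes incoming column names to snake_case before records enter the pipeline. The function names and the choice of snake_case are assumptions made for this example, not a prescribed standard.

```python
import re


def to_snake_case(name: str) -> str:
    """Convert a column name such as 'Order ID' or 'customerName' to snake_case."""
    # Insert an underscore at lower-to-upper boundaries: customerName -> customer_Name
    name = re.sub(r"(?<=[a-z0-9])(?=[A-Z])", "_", name.strip())
    # Collapse every run of non-alphanumeric characters into one underscore
    name = re.sub(r"[^A-Za-z0-9]+", "_", name)
    return name.strip("_").lower()


def standardize_record(record: dict) -> dict:
    """Return a copy of the record with every key renamed to snake_case."""
    return {to_snake_case(key): value for key, value in record.items()}


# Hypothetical record from a source system with inconsistent naming
raw = {"Order ID": 17, "customerName": "Ada", " Total-Amount (USD) ": 42.5}
clean = standardize_record(raw)
```

Applying one function like this at ingestion means every downstream model and dashboard sees the same field names, whatever the source system called them.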

Ensure security and privacy

Implement security measures such as encryption, access controls, and data masking. This helps prevent data breaches and illegal data access, both of which may cause substantial harm to a business. In addition, the pipeline becomes more accessible to authorized users while remaining compliant with applicable data protection rules.
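To make access controls and data masking more concrete, the following Python sketch filters a row down to the columns a role may see and masks email addresses on the way out. The role names, column names, and the `ROLE_COLUMNS` mapping are hypothetical; a real setup would usually rely on the access controls of your warehouse or data platform.

```python
import hashlib
import hmac

# Hypothetical mapping of roles to the columns they are allowed to read
ROLE_COLUMNS = {
    "analyst": {"order_id", "amount"},
    "support": {"order_id", "email"},
}


def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, sep, domain = email.partition("@")
    if not sep or not local:
        return "***"  # not a recognizable address, so hide it entirely
    return f"{local[0]}***@{domain}"


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace a value with a keyed SHA-256 digest so it can still be joined on."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def view_for_role(row: dict, role: str) -> dict:
    """Return only the columns the role may see, with emails masked."""
    allowed = ROLE_COLUMNS.get(role, set())  # unknown roles see nothing
    visible = {key: value for key, value in row.items() if key in allowed}
    if "email" in visible:
        visible["email"] = mask_email(visible["email"])
    return visible


row = {"order_id": 1, "email": "jane@example.com", "amount": 9.5}
support_view = view_for_role(row, "support")
```

Defaulting unknown roles to an empty set is a deliberate choice here: access has to be granted explicitly rather than removed after the fact.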

Choose the right technology

Choose a unified data stack with an intuitive UI and access control features so that:

- Team members can use your data tool regardless of their data literacy level.

- You no longer depend on expensive data engineers to set up your data infrastructure.

- Each user has access only to the data they need.

Automate processes

Automating manual processes in a data pipeline can lead to more efficient and reliable data processing. For example, automating tasks such as data ingestion, cleaning, and transformation can reduce the potential for human error and save time. Other processes that can be automated include data validation, testing, and deployment, which can help ensure the accuracy and reliability of the data pipeline. Automating processes can also free up time for data teams to focus on more complex tasks like data analysis and modeling, which can lead to better insights and decision-making.
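As one example of an automated check, here is a small Python sketch of a validation step that separates complete rows from rows with missing required fields, so bad records are caught without manual review. The field names and the rule itself (a required value must not be missing or empty) are assumptions for illustration.

```python
def validate_rows(rows, required_fields):
    """Split rows into valid ones and rejected ones with the reason attached."""
    valid, rejected = [], []
    for row in rows:
        # A field counts as missing when it is absent, None, or an empty string
        missing = [f for f in required_fields if row.get(f) in (None, "")]
        if missing:
            rejected.append({"row": row, "missing": missing})
        else:
            valid.append(row)
    return valid, rejected


# Hypothetical batch arriving from an ingestion job
batch = [
    {"order_id": 1, "amount": 20.0},
    {"order_id": 2, "amount": None},
    {"amount": 5.0},
]
valid, rejected = validate_rows(batch, required_fields=["order_id", "amount"])
```

Running a step like this on every load, and routing the rejected rows to a review table, replaces ad hoc spot checks with a repeatable rule.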

Monitor and document the pipeline

Monitor and document the data pipeline to ensure that it’s functioning correctly and make it easier to troubleshoot and make improvements. Documentation also allows new team members to quickly understand the pipeline and its processes.
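A lightweight way to start monitoring is to record the status and duration of every pipeline step. The Python sketch below does this with a decorator; the `monitored` decorator, the `RUN_STATS` list, and the example step are hypothetical names introduced for this illustration, and a production setup would typically send these records to a monitoring tool instead of an in-memory list.

```python
import functools
import logging
import time

logger = logging.getLogger("pipeline")
RUN_STATS = []  # in-memory record of step runs, for illustration only


def monitored(step_name):
    """Decorator that records status and duration of a pipeline step."""
    def decorator(func):
        @functools.wraps(func)  # keeps the step's name and docstring intact
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            status = "failed"
            try:
                result = func(*args, **kwargs)
                status = "ok"
                return result
            finally:
                # Runs on success and on failure, so every run is recorded
                seconds = time.perf_counter() - start
                RUN_STATS.append(
                    {"step": step_name, "status": status, "seconds": seconds}
                )
                logger.info(
                    "step=%s status=%s seconds=%.3f", step_name, status, seconds
                )
        return wrapper
    return decorator


@monitored("drop_empty_rows")
def drop_empty_rows(rows):
    """Remove rows that contain no values at all."""
    return [row for row in rows if row]


cleaned = drop_empty_rows([{"id": 1}, {}, {"id": 2}])
```

Because the decorator preserves each function’s docstring, the same code that is monitored also stays self-documenting.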

Data teams need to prioritize assessing and improving accessibility to ensure the pipeline remains efficient and effective. This involves regularly evaluating the pipeline’s user-friendliness and comprehensibility, tracking its performance metrics, and identifying and addressing any accessibility issues throughout its lifecycle.

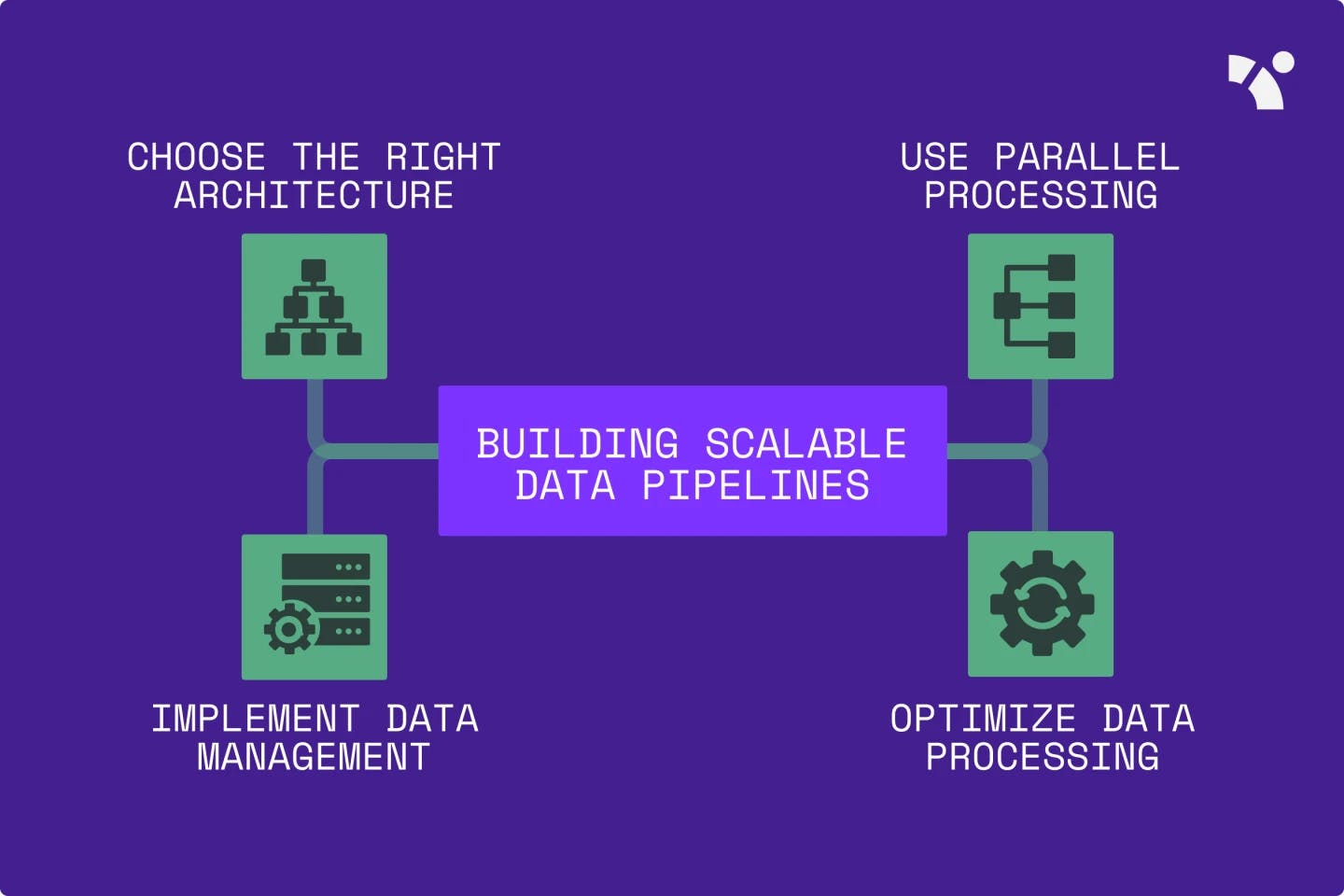

How to build a scalable data pipeline

Like fixing accessibility concerns, building a scalable data pipeline takes time and work up front, but the investment pays off as the organization grows. Here are the steps to take to ensure the data pipelines you create are scalable:

Choose the right architecture

Select a flexible architecture that fits your organization’s data processing needs. A scalable architecture can be thought of in terms of its ability to handle increasing amounts of data or processing requirements without needing major changes or causing performance issues. This can involve using distributed systems that allow for horizontal scaling by adding more nodes to the system as needed or cloud-based solutions that provide scalable infrastructure on demand. The architecture should also be flexible enough to accommodate changes in data sources or processing needs over time.

Implement data management

Implement a data management strategy based on the specific needs and goals of your organization, the data types and sources you are working with, and the types of analysis or processing you will be performing on that data. For example, if you have a large volume of structured data that needs to be analyzed for business intelligence purposes, a traditional data warehousing approach may be suitable. On the other hand, if you are dealing with unstructured data such as social media feeds or sensor data, a data lake approach may be more appropriate. A data lake allows you to store large volumes of data in its native format, which makes it easier to handle and process data that may be of varying quality and formats.

Use parallel processing

Implement parallel processing techniques to increase your data pipeline’s processing capacity. Parallel processing involves breaking a task down into smaller sub-tasks that can be executed simultaneously. For example, when a pipeline has to process a large amount of data, you can split the data into smaller chunks that are processed in parallel by multiple processors.
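The chunking idea can be sketched in a few lines of Python with the standard library’s `concurrent.futures` module. The chunk size, worker count, and the `transform_chunk` function are placeholders chosen for this example. The sketch uses a thread pool, which suits I/O-bound steps; for CPU-bound work in Python, `ProcessPoolExecutor` offers the same interface and runs the chunks in separate processes.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(items, chunk_size):
    """Split a list into consecutive chunks of at most chunk_size items."""
    if chunk_size < 1:
        raise ValueError("chunk_size must be at least 1")
    return [items[i:i + chunk_size] for i in range(0, len(items), chunk_size)]


def transform_chunk(chunk):
    """Placeholder transformation applied to one chunk."""
    return [value * 2 for value in chunk]


def process_in_parallel(items, chunk_size=1000, max_workers=4):
    """Transform all items chunk by chunk using a pool of workers."""
    chunks = split_into_chunks(items, chunk_size)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in the order of the input chunks
        results = pool.map(transform_chunk, chunks)
        return [value for chunk in results for value in chunk]


doubled = process_in_parallel(list(range(10)), chunk_size=3)
```

Because each chunk is processed independently, capacity can be increased by adding workers rather than by rewriting the transformation.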

Optimize data processing

Optimize data processing techniques to reduce processing time and improve performance. There are several ways to optimize data processing, such as minimizing the number of data transformations, selecting faster algorithms, reducing data movement, leveraging caching and in-memory processing, compressing data, and doing incremental updates instead of re-computing historical data.
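Incremental updates are often the easiest of these optimizations to picture. The Python sketch below keeps a running total together with a watermark (the latest `updated_at` value already processed) and only folds in rows newer than that watermark. The field names and the shape of the `state` dictionary are assumptions for this example.

```python
def incremental_total(state, new_rows):
    """Update a running total using only rows newer than the stored watermark.

    state is a dict with two keys: 'watermark' and 'total'.
    """
    fresh = [row for row in new_rows if row["updated_at"] > state["watermark"]]
    if not fresh:
        return state  # nothing new, so no recomputation is needed
    return {
        "watermark": max(row["updated_at"] for row in fresh),
        "total": state["total"] + sum(row["amount"] for row in fresh),
    }


# Hypothetical state after an earlier run, followed by a new batch
state = {"watermark": 2, "total": 30}
batch = [
    {"updated_at": 2, "amount": 20},  # already counted, skipped
    {"updated_at": 3, "amount": 5},
    {"updated_at": 4, "amount": 7},
]
state = incremental_total(state, batch)
```

Each run touches only the new rows, so the cost depends on the size of the batch rather than on the full history.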

A pipeline optimized for scalability can process large volumes of data efficiently, in real time where needed, and adapt to future requirements. This improves the data team’s efficiency and flexibility, and its ability to empower business users to make informed, data-driven decisions.

Design production-grade data pipelines with Y42

At Y42, we are committed to addressing the accessibility and scalability issues that organizations face when managing their data. By leveraging Y42’s Modern DataOps Cloud, teams can quickly and easily prototype and deploy production-ready data pipelines, saving their data engineers valuable time.

Y42 offers a unified and accessible solution. Our integrated web app, API, and CLI, together with our orchestration feature and data warehouse processing, simplify the production and management of data pipelines.
